Current Projects

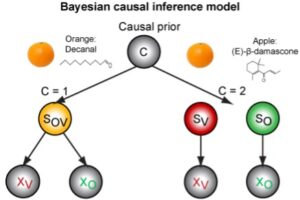

Olfactory-visual causal inference in perception and the brain (OLVICI; RO 5587/1-1)

Humans integrate sensory stimuli from the physical senses (e.g. sight, hearing, touch) and the chemical senses (e.g. smell, taste) into a coherent multisensory perception of their environment, and they evaluate this perception. For example, we perceive food based on its appearance and odour and judge whether we like it or not. However, the brain should only integrate and evaluate multisensory stimuli that are likely to originate from a common cause: when seeing and smelling food, the brain must first infer whether the appearance and the smell were actually caused by a single food, rather than by different foods or other odour sources. This project uses psychophysical, psychophysiological and EEG methods to investigate how humans infer the causal structure of olfactory-visual food stimuli, integrate or segregate these stimuli, and finally evaluate their multisensory pleasantness. The results are expected to substantially expand our understanding of how the brain forms, evaluates and expresses the multisensory perception of food.

Project duration: 2023-2026

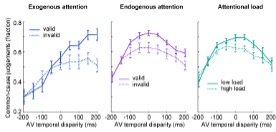

The interplay of multisensory causal inference and attention in audiovisual perception (MultiAttend; RO 5587/5-1)

In our everyday environment, the brain constantly combines a multitude of stimuli from different senses into a coherent multisensory perception. For example, at a party we correctly perceive which voices belong to which faces. To link the stimuli veridically, the brain should only combine stimuli that originate from the same source (such as a speaker). However, the brain has only limited attentional capacity to process multisensory stimuli and to focus on relevant ones (e.g. the conversation partner). The brain therefore has to solve two challenges. First, it must infer the causal structure of the multisensory stimuli in order to integrate them if they share a common cause or to segregate them if they have independent causes. Second, it must use selective attention to direct its limited resources to relevant stimuli amid competing multisensory input. The current project investigates the interplay of multisensory causal inference and attention in audiovisual perception using psychophysics, electroencephalography (EEG) and Bayesian computational modelling.

Project duration: 2024-2027

The influence of physical activity on rumination

Repeated and persistent rumination (brooding) is a major symptom of depressive disorders, while physical activity has been shown to have positive effects on depressive symptoms. In cooperation with the University of Tübingen, the project investigates whether the positive effect of physical activity is mediated by a reduction in rumination. In an EEG study with individuals with depression, we are developing a decoder that recognises ruminative states from EEG activity patterns. Using this decoder, we aim to show how physical activity reduces rumination as decoded online from neurophysiological data.

Project duration: 2021-2025

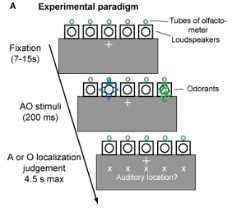

The integration of auditory-olfactory signals in spatial perception

In addition to spatial vision and hearing, humans, like many animals (e.g. dogs), can localise external odour sources by smell. It is unclear whether humans combine spatial olfaction with spatial vision or hearing. In this psychophysical study, we investigate whether the brain integrates spatial olfactory stimuli with spatial auditory stimuli. The project is funded by the Special Fund for Scientific Work at FAU.

Project duration: 2023-2024